Using SGE

As discussed briefly here, SGE is the job scheduler you use on the cluster to get your work done.

A job is basically a task you want to perform, generally a script that you want to run to do your analysis. It's the term used by the scheduler for your submission to its queue.

qlogin - For Interactive Jobs

An interactive job (aka interactive session, or qlogin session) is one where you want to see a terminal and type and execute commands interactively, or where you want to run a GUI-based app like Matlab or FSL. To run an interactive job, use qlogin, and you'll see something like this:

[mgstauff@chead ~]$ qlogin

Your job 27653 ("QLOGIN") has been submitted

waiting for interactive job to be scheduled …

Your interactive job 27653 has been successfully scheduled.

Establishing /opt/gridengine/bin/rocks-qlogin.sh session to host compute-0-10.local …

...

[mgstauff@compute-0-10 ~]$

The mgstauff@chead on the first line shows the user was logged on as user mgstauff on server chead. After running qlogin the user is now logged on to server compute-0-10, which is one of the compute nodes. Do whatever you need to do, run a script, run Matlab, etc.

Your home directory is the same on chead as on the compute nodes, i.e. it's shared between them. The applications are still in /share/apps, and data is still in /data.

When you're done, type exit to end the interactive session.

Ending an interactive session

This is IMPORTANT! When you're done, end the interactive session by typing exit and you'll get back to the front end login (i.e. chead). If you do not end the interactive session, it will tie up resources on the system, which means you or someone else will have less chance to get their work done.

Ending an 'orphaned' interactive session

If you have an interactive session that's running and want to end it, but you can't get to the computer or shell where you started it, you can login to the front end again, find the job using qstat and kill it using qdel (see below).

Only run interactive (i.e. qlogin) sessions when really necessary

Because interactive sessions (i.e. qlogin) tie up a full computing 'slot' (see below) or more while they're used, and can easily be forgotten about or otherwise left idle, they should only be used when you have to. That is, use them only when you cannot run your work using just a script.

For example, if you login interactively and run Matlab to simply run a script in Matlab that takes a few hours to complete, this will likely waste resources. Because you are unlikely to be around right when the Matlab job finishes, the computing resources will be tied up by your qlogin session until you can get back to your session and manually log out. Instead, use qsub (see below) to run a simple script that calls matlab and runs your matlab script via the matlab command line interface.

For related details, see here.

Limits

Number of sessions

Currently each user can use five interactive/qlogin slots at once. So if you request the default single slot for your qlogin sessions, you can run 5 at the same time. Or you can run a 2-slot session and a 3-slot session at the same time, etc. This limit may be changed in the future, depending on how this limit works out for everyone.

Duration of sessions

Interactive sessions are currently set to be automatically killed after 48 hours. This is done to avoid the common scenario where someone doesn't log out when they're done, and forgets to ever close the session.

If you need to run an interactive session for longer than 48 hours (e.g. for a long debugging session in Matlab), there is a qlogin queue that allows sessions to run for 7 days. Please contact the sysadmin about this.

qsub - Batch/Script jobs

The most common way to use SGE is to run batch jobs via qsub. This is the non-interactive method.

qsub allows you to submit a job defined by a script (a file that holds a series of commands - basically a program), and the job scheduler will place your job in the job queue, to be run either immediately or when resources open up.

You run a batch job like so, where myjobscript is the name of a script that holds some commands. For example, it could be a BASH script, or a PERL script.

[mgstauff@chead ~]$ qsub myjobscript

Your job 27657 ("myjobscript") has been submitted

Here's an example BASH script that could be in the file named myjobscript (you can cut-n-paste into a text editor on the cluster to try it yourself):

#!/bin/bash
echo I am a job running now on $HOSTNAME
ZZZ=5
echo Sleeping for $ZZZ...
sleep $ZZZ
echo NSLOTS: $NSLOTS
echo All Done.

Output from your job

Your script should be setup to save your image or data output files as you normally would, i.e. typically in your /data/<xyz>/<username> directory somewhere in your project tree.

But what happens to the terminal output of your script? That is, the text or error messages your script normally generates and shows on the screen when you run it from the command line? This output is saved to special files for each job in the job's working directory. They look like this:

[mgstauff@chead ~]$ ls myjobscript.*
myjobscript.e27657 myjobscript.o27657

The file names have the structure <jobname>.[e|o]<job-ID>.

The o file is for 'standard output', the linux term for text that shows up on your terminal from your script.

The e file is for 'standard error', the linux term for error messages from your script (by default they show up in your terminal window, but can be directed to locations separately from standard output).

Or use the -j y option to qsub to join them into a single file.

The path for these files can be changed using the -o and -e options to qsub. Run man qsub for details or check online.

The status of your job

To find out what's happening with your job(s), use the qstat command:

[mgstauff@chead ~]$ qstat
job-ID  prior   name       user         state submit/start at     queue            slots ja-task-ID
----------------------------------------------------------------------------------------------------
  27657 0.55    myjob      mgstauff     r     05/01/2014 17:50:47 all.q@comput...      1

Running qstat without any options will show all of your currently running and queued jobs.

The job-ID column shows the job id of the job, which you use to reference the job for any commands you may need to run later on the job (e.g. to delete it if necessary).

The state column shows the state of the job. These are the most common states:

- r for running
- qw for queued and waiting
- E for error (or Eqw)

In this case, the state is r, so our job is running.

The queue column shows you which queue your job is running “in”. Different queues have different purposes and resource limits. In this case, our job is running in the default all.q queue for batch jobs. It also shows which compute node the job is running on (truncated in the above example).

The slots column shows how many slots your job is using. Each slot corresponds to a single processor core on the compute node. Parallel-processing (aka multi-threaded) jobs will show 2 or more slots here.

The ja-task-ID column is empty for regular batch jobs. For job array jobs, in which more than one task (sub-job) is launched from a single call to qsub, this column shows the task ID, i.e. the sub-job number. These are discussed elsewhere.

If you have no jobs running or queued, qstat will have no output.
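If you have many jobs, a quick count per state can be easier to read than the full listing. Here is a minimal sketch; the function name count_job_states is made up for illustration, and it assumes the default qstat column layout shown above (state in the fifth column, two header lines).

```shell
#!/bin/bash
# Hypothetical helper: count jobs per state from qstat-style text on stdin.
# Assumes the default column layout, where state is field 5 and the first
# two lines are the column header and a dashed separator.
count_job_states() {
    awk 'NR > 2 && NF >= 5 { count[$5]++ }
         END { for (s in count) print s, count[s] }' | sort
}

# On the cluster you would pipe the real command in:
#   qstat | count_job_states
```

The output is one line per state, for example a line for r and a line for qw, each followed by the number of jobs in that state.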

When jobs fail

If your job fails because of an error with the SGE system itself, you'll see it listed via qstat, but its state will be Eqw. The job stays in the queue until you delete it so you are sure to notice it failed somehow. Check the error output of the job (the .e file) to (hopefully!) figure out what happened.

More details about your job

To get more details about your job, run

qstat -j <jobid>

where <jobid> is the job-ID you get from calling qstat.

Using basic.q queue for qsub jobs

By default when you use qsub, your job uses the SGE queue named all.q, which uses the newer set of compute nodes. There is another queue called basic.q that uses an older set of compute nodes with less memory (24GB vmem available) and fewer (8) and slower CPUs. Everyone has a slot quota of 16 by default on basic.q. Even though it's slower, basic.q can be useful when all.q is very busy and your jobs are sitting in the queue waiting to run, or when you have lots of jobs to run and your slot limit on all.q is reached. Another time to use basic.q is when you have lots of jobs running on all.q and need to run some smaller or test jobs, but don't want to use any of your quota on all.q.

To run a job only on basic.q:

qsub -q basic.q myjob-script

To run a job either on all.q or basic.q:

qsub -q all.q,basic.q myjob-script

Submit a job to both queues, like shown above, when you want it to run on whichever queue has space and quota available for you.

For jobs already in the queue

To change the requested queue while a job is waiting in the queue, for example:

qalter -q all.q,basic.q <job-id>

The above command will change job with <job-id> to run on either all.q or basic.q. You might do this when you first submit to all.q only, but then see that the cluster is very busy and want to change this. This can be better than deleting your job and resubmitting to both queues, because if your job's been in the queue for any amount of time, it's been building 'priority' to let it run sooner than more-recently submitted jobs.

Common qsub options

These options are passed on the command line along with your call to qsub, like usual linux commands.

-v variable[=value]

Define and optionally assign (or reassign) an environment variable to be used in the qsub job environment

NOTE Anything you pass via -v will *not* override environmental vars that are passed by the -V option (big V). See below.

An example:

qsub -v MY_VAR=123 my-script

-cwd

Tells Sun Grid Engine that the job should be executed in the same directory from which qsub was called. Otherwise, the working directory of the job is your home directory. This can be important. This setting affects the location of the output files (for linux standard out and standard error). You may not want output files ending up in your /home dir by default. For example,

[mgstauff@chead project1]$ qsub -cwd myjob

will send the output files to the project1 dir where the command was run, instead of /home/mgstauff.

-j [y|n]

Specifies whether or not the standard error stream of the job is merged into the standard output stream. So instead of your standard and error output going to two separate files (see above), they can go to the same file, like so:

qsub -j y myjob

-m e -M <your-email-address>

Send an email to address when the job ends. For more options AND important caveats, see here

-V

States that the job should have all the same environment variables as the shell executing qsub. If you don't change any environment variables in your front end login instance, then typically you won't need to use this.

NOTE That any environment variables passed this way will override anything assigned by the -v option (lowercase v)

NOTE If your environment contains a definition for ITK_GLOBAL_DEFAULT_NUMBER_OF_THREADS, it may interfere with the automatic setup of threading for ITK-based apps (e.g. ANTs and ITK-SNAP).

-b

States that the command being executed could be a single binary executable or a bash script. For example the command 'ls' is a single binary. This option takes a y or n argument indicating either yes the command is a binary or no it is not a binary.

The last argument to qsub is the script/command to be executed.

Setting options directly in your script

qsub options can also be set in your script directly. This is very convenient. Use the #$ symbol at the beginning of a line to set qsub options. For example:

#!/bin/sh
#
# Make sure that output files arrive in the working directory
#$ -cwd
#
# Merge the standard out and standard error to one file
#$ -j y
#
# Mail me when job ends
#$ -m e
#$ -M gradstudent@neversleeps.com
#
echo This is the work part…
sleep 30
echo …done napping.

Setting default options for ALL scripts

.sge_request file

You can create a file $HOME/.sge_request (i.e. in your home directory), and add any options you want to have as defaults for qsub. Put each option on a line by itself. If the file doesn't exist just create a new file.

These options are processed first by SGE, so if you give different settings for the same options either by embedding them in your job script or passing them via the qsub command, the options in the .sge_request file will be overridden.

NOTE These options are used for every job you run, so use them carefully.

Example

# Default Requests File
# request more memory than the default memory
-l h_vmem=5.5G,s_vmem=5.0G
# Merge the standard out and standard error to one file
-j y
# Mail me when job ends
-m e -M postdoc@underpaid.com

Email notification

To be notified about your job when it finishes, use the -M and the -m e options:

qsub -m e -M michael@upenn.edu my-script

This will notify you when your job is ended.

NOTE on delivery problems: A number of email addresses never receive emails from the cluster. Our logs show they leave chead OK, but never make it through the UPHS email relay server. UPHS support hasn't yet been able to sort this out for us. You can try a different address if yours isn't working. upenn.edu domains can be worse than outside domains. gmail addresses seem pretty reliable. If you can't get any of your email addresses to work, let us know.

To test your email address, do this:

echo test | mail -s "test email from chead" <your-email>

To be notified when the job is aborted or suspended or when it begins, change the -m option (see qsub documentation) or here:

‘b’ Mail is sent at the beginning of the job.

‘e’ Mail is sent at the end of the job.

‘a’ Mail is sent when the job is aborted or rescheduled.

‘s’ Mail is sent when the job is suspended.

‘n’ No mail is sent.

You can combine these options, e.g. -m be.

What if I Change My Job Script After Submitting it via qsub ?

When you submit a job like so

qsub my-script

the SoGE system makes a copy of your script my-script, and uses this copy when it runs your job - whether it runs immediately or if your job sits in the queue for any amount of time. This means that any changes to my-script you make after you submit it as a qsub job, will not have an effect on the job. However, note that any other scripts or files called or referenced by my-script are not copied, so if you change those after submitting a job that references them, the changes affect the job outcome.

Temp directory

IMPORTANT

If your script/job makes use of temporary files and you need to provide a path for them, use the TMPDIR environment variable that is set up for your qsub job (and for qlogin). This is a temporary directory local to the compute node and unique to your job. It will be much faster than writing temporary files to /data or other remote-mounted devices. Also, it's automatically deleted when your job ends, so it keeps the /tmp dir on your local node clean for other users. If your script/application is hard-coded to use /tmp or TMP, that is alright. It will use the local compute node drive, but the files will take longer to be cleared out of /tmp.

In short, do not write temporary files to your data directory or other mounted devices unless you have to for some special reason. This will slow down the whole system a bit for you, and for everyone.
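As a rough illustration of the advice above, here is a sketch of a job script that does its scratch work under TMPDIR and copies only the final result out. The file names and the RESULT_DIR variable are hypothetical, and the fallback to /tmp is there only so the script can be tried outside the scheduler.

```shell
#!/bin/bash
# Sketch of a job script that keeps scratch files on node-local storage.
# TMPDIR is set by SGE inside qsub/qlogin jobs; the /tmp fallback is only
# so the script also runs outside the scheduler.
scratch="${TMPDIR:-/tmp}"

# Create a private working directory under the scratch area.
workdir="$(mktemp -d "$scratch/myjob.XXXXXX")"

# Stand-in for real work: write an intermediate file, then derive a result.
printf '3\n1\n2\n' > "$workdir/intermediate.txt"
sort -n "$workdir/intermediate.txt" > "$workdir/result.txt"

# Copy only the final result to permanent storage.
# RESULT_DIR is a hypothetical variable; on the cluster it would point
# somewhere under your /data project tree.
result_dir="${RESULT_DIR:-$PWD}"
cp "$workdir/result.txt" "$result_dir/result.txt"

# SGE removes TMPDIR when the job ends, but removing our own subdirectory
# is harmless and also covers the /tmp fallback.
rm -rf "$workdir"
```

Only the single cp at the end touches remote-mounted storage; all intermediate reads and writes stay on the compute node.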

Deleting jobs

This works for both qsub jobs and qlogin sessions.

If you need to delete or cancel a job, either before it's finished running or while it's still waiting to run, use the qdel command with your job id. Get the job id by running qstat, then delete it:

[mgstauff@chead ~]$ qstat
job-ID  prior   name       user         state submit/start at     queue            slots ja-task-ID
------------------------------------------------------------------------------------------------------
  27662 0.55    myjob      mgstauff     r     05/01/2014 18:41:28 all.q@com...         1

[mgstauff@chead ~]$ qdel 27662
mgstauff has registered the job 27662 for deletion

[mgstauff@chead ~]$ qstat

Once your last running or queued job is deleted, qstat will not show any output.

Matlab and qsub

You may need to run Matlab scripts in a qsub job. It's fairly easy. Remember to do this when what you're doing in Matlab is really just a batch job, i.e. you're not interacting with it beyond starting a script running.
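Here is one possible shape for such a job script, as a sketch only. The script name my_analysis is hypothetical, and the cluster may provide its own preferred wrapper. It uses Matlab's -nodisplay, -nosplash and -r command line options, and wraps the call in try/catch so that Matlab exits when the script finishes or fails instead of sitting at a prompt and holding a slot.

```shell
#!/bin/bash
# Sketch of a qsub job script that runs a Matlab script non-interactively.
# "my_analysis" is a hypothetical script name (my_analysis.m in the current
# directory or on the Matlab path).
matlab_script="my_analysis"

# try/catch plus explicit exit calls make sure Matlab quits when the script
# finishes or fails, so the job does not sit idle holding a slot.
matlab_cmd="try, ${matlab_script}; catch err, disp(getReport(err)); exit(1); end; exit(0);"

if command -v matlab >/dev/null 2>&1; then
    matlab -nodisplay -nosplash -r "$matlab_cmd"
else
    # Lets you try the script on a machine without Matlab installed.
    echo "matlab not found; would run: matlab -nodisplay -nosplash -r \"$matlab_cmd\""
fi
```

You would submit it like any other job script, e.g. with qsub -cwd so Matlab starts in the directory that holds your .m file.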

Keeping Slots Free for Your Other Jobs

Sometimes you may be running a large set of jobs, e.g. 400 subjects. You can submit them all at once and they will all enter the queue and run as soon as you have slots free in your quota. However you may subsequently want to run some other jobs immediately, without having to wait for all the previous 400 jobs to finish. There are two options in this case:

- Submit your original group of jobs as an Array Job, and use the -tc N option, where N is the maximum number of jobs from the Array Job that you want to run at once. So if your slot quota is 32, and you submit your array job with '-tc 20', then 20 of the array jobs will run at once, leaving 12 slots free for other jobs to run immediately. From the documentation:

-tc max_running_tasks

allow users to limit concurrent array job task execution. Parameter max_running_tasks specifies maximum number of simultaneously running tasks. For example we have running SGE with 10 free slots. We call qsub -t 1-100 -tc 2 jobscript. Then only 2 tasks will be scheduled to run even when 8 slots are free.

- Use the qalter command to place a hold on your queued jobs, submit your new job and wait until it starts running, then remove the hold on your queued jobs. The disadvantage here is that you have to wait until one of your running jobs finishes before your new job starts, and then you have to remember to remove the hold on your queued jobs. Here are the commands:

put a hold on all queued jobs for user mgstauff:
qalter -u mgstauff -h u \*

release all holds:
qalter -u mgstauff -h U \*
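For the Array Job option, each task needs a way to pick its own piece of work. SGE sets the SGE_TASK_ID environment variable to the task number for each array task, so a job script can use it as an index. The sketch below assumes a made-up list of subject names and a hypothetical helper called subject_for_task.

```shell
#!/bin/bash
# Sketch of an array-job script. SGE sets SGE_TASK_ID to the task number
# (1, 2, 3, ...) for each task started by "qsub -t".
# The subject names are made up for illustration.
subjects=(sub-01 sub-02 sub-03 sub-04)

# Hypothetical helper: map a task ID to a subject name.
subject_for_task() {
    local task_id="$1"
    # SGE sets SGE_TASK_ID to "undefined" for non-array jobs, so fall back
    # to task 1 for anything that is not a plain number.
    case "$task_id" in
        ''|*[!0-9]*) task_id=1 ;;
    esac
    # Bash arrays are zero-based, task IDs start at 1.
    echo "${subjects[$((task_id - 1))]}"
}

subject="$(subject_for_task "${SGE_TASK_ID:-1}")"
echo "Processing $subject"
```

With four subjects you would submit this with something like qsub -t 1-4 -tc 2 followed by the script name, so that at most two of the tasks run at once.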

Resources (memory, slots/cpu cores)

The SGE job scheduler manages the resources of the cluster, allowing users to run jobs within defined resource limits, called quotas. From the user's perspective, this means it's managing the available computing slots and available memory (RAM). This way, the system's resources are shared more equally, and no one user can dominate and lock up all the resources.

The cluster has 21 compute nodes each with 16 cores and 64GB RAM, for 336 cores, or slots.

The RAID storage is connected via fast 10Gbit ethernet.

Checking your current resource usage

Use the qquota command to check the total resource quota usage of all your running jobs.

You can ignore all the lines that start with limit_slots_to_cores_rqs.

Slots and CPU processing

A slot is equivalent in our setup to a single core on a single CPU on a single compute node. This is the unit of processing power. By default when you run a qsub or qlogin job, your job gets assigned one slot.

Current resource quotas allow each user to use 32 slots at a time for qsub jobs, or 5 slots for qlogin jobs. This means you can run 32 single-threaded batch jobs at the same time, or any combination of parallel (i.e. multi-threaded) jobs on multiple cores that yields a total of 32 slots in use (see below). If you submit jobs that request more than a total of 32 slots, only those that take a total of 32 slots will run immediately (assuming there are enough available slots), and the rest will be queued and run as your jobs finish and resources are available.

Similarly for qlogin jobs, you can run 5 single-slot qlogin sessions, or fewer multi-slot sessions that take a total of 5 slots.

Checking How Busy the Cluster Is

cfn-resources

The best way to check resources is to run this command:

cfn-resources

to get a list of resources available for all.q, the default SGE queue. To view resources available for other queues, pass that queue name as an argument, e.g.:

cfn-resources himem.q

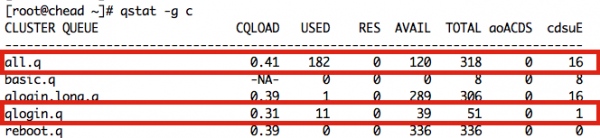

qstat -g c

For a lower-level view, run qstat -g c. This command shows how many slots are used for each queue. HOWEVER it does not take memory into account. Often a compute node has CPU cores available (slots) but no memory. Use the cfn-resources command to see both resources.

See this example:

all.q - this is the queue used for qsub jobs. In the above example, we see there are 182 slots currently in use for qsub jobs in all.q, and 120 available out of 318 total. 16 slots are unavailable due to error (cdsuE column) because one of the nodes is currently down. NOTE: There may be slots available, yet your jobs aren't running even if you haven't hit your usage quota (as shown by qquota). This is because jobs can take more than the default amount of memory, making a node unavailable due to memory limits before all of its slots (cpu cores) are in use.

qlogin.q - this is the queue for qlogin interactive jobs. In the above example, there are 11 slots in use, 39 available out of 51 total. 1 is not available due to an error.

basic.q - in the future this will be the queue for older compute nodes, used by users who have not signed up for high speed slot groups

other queues - can be ignored

qstat -F

A more detailed view of each node, including slot and memory usage, can be seen this way:

qstat -F h_vmem,s_vmem,slots -q all.q

This will show the info for the qsub queue, all.q. Replace all.q with another queue name to see its status.

NOTE that the slots info listed here is in fact CPU cores. So it'll show how many cores are available for each machine, along with how much memory. This tells you whether there are cores free and enough memory on any machine to run your jobs.

You can add an alias to make this easier:

alias qsF='qstat -F h_vmem,s_vmem,slots -q all.q | less'

Memory

Default memory limit, qlogin and qsub: 3.0G RAM (allows for vmem usage of Matlab R2014b GUI)

This allows for effective use of each node's RAM when some jobs are run that require higher amounts of memory. Most jobs won't use the default amount of RAM (and this accommodates most of the complications of using virtual memory for memory limits, for you uber geeks out there).

If your job tries to allocate more memory than allowed, it will be killed. Typically you'll see a message like one of these in the job's output or error stream:

Out of memory
Insufficient Memory
Unable to allocate object
Segmentation Fault (if the program doesn't cleanly handle memory limits)

If you're setup for email notifications when jobs finish, you will get an email something like this:

Job 1058662 (example.txt) Aborted
Exit Status = 152
Signal = XCPU
User = sweisberg
Queue = all.q@compute-0-11.local
Host = compute-0-11.local
Start Time = 06/19/2015 11:41:52
End Time = 06/19/2015 15:15:32
CPU = 03:32:05
Max vmem = 2.492G
failed assumedly after job because:
job 1058662.1 died through signal XCPU (24)

Note the “Max vmem” line shows 2.492G, just below the default 2.5GB limit. This indicates the job most likely died because it overreached the memory limit.

Note it says the job died through 'signal XCPU (24)' which means a resource limit was reached.

However, you may not see any message that your job was killed because of a memory limitation. There's a glitch in SGE: when you hit a memory limit, the SGE system doesn't always catch the fact before the operating system does. If the operating system notices first, then your job will be killed such that SGE can't get a message back to you about what happened, and any exception/error handling in the app will most likely not be able to get its message to your output files before the process is terminated. I hope to be able to find a workaround to this in the future.

Java Memory Issues

Java likes to allocate lots of RAM. You usually have to limit its memory. Click here for details.

Jobs on chead

If you're running something directly on chead, there are different limits. See here for details.

Requesting more (or less) memory

If you need to allocate more memory for a job, run qsub (or qlogin) with the following options:

qsub -l h_vmem=7.1G,s_vmem=7G my-job-script
qlogin -l h_vmem=16.5G,s_vmem=16G

In this example, about 7GB of RAM is requested for the qsub job and about 16GB for the qlogin job. The value for h_vmem should ideally be slightly higher than for s_vmem (s_vmem is a soft limit that gives your job a chance to exit cleanly, whereas h_vmem is a hard limit that unceremoniously kills your job).
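If you request memory often, a tiny helper can keep the hard limit slightly above the soft limit for you. This is only a sketch: the function name vmem_request is made up, and the 0.1GB margin simply mirrors the qsub example above rather than any site rule.

```shell
#!/bin/bash
# Hypothetical helper: build the resource string for the -l option from a
# soft limit in GB, setting the hard limit 0.1 GB higher. The margin is an
# assumption taken from the qsub example, not a site rule.
vmem_request() {
    local soft_gb="$1"
    awk -v s="$soft_gb" 'BEGIN { printf "h_vmem=%gG,s_vmem=%gG\n", s + 0.1, s }'
}

vmem_request 7    # prints h_vmem=7.1G,s_vmem=7G
```

On the cluster you could then write qsub -l "$(vmem_request 7)" my-job-script.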

Great Tip

If you know your job needs LESS memory than the default, you can request less using the options above. This may let your jobs run sooner if someone is running memory-intensive jobs that are keeping some nodes from having space for additional jobs that use the default amount of memory.

High-memory needs

See below for a discussion of the high-memory queue for jobs that need more than the default maximum RAM.

How much memory did my job actually use?

It's helpful to run a job and see how much vmem it actually used once it's done, so you can request only as much as you need.

NOTE: It turns out the maxvmem value described below is not fully reliable. If your job uses more memory in short bursts, the system can miss that and report a lower value for maxvmem than you actually used. So this value can really only be relied on as a minimum.

To get a quick overview of jobs you ran in the past number of days, with easy to read formatting:

qacct -j -u <your-username> -d <#-of-days-back-to-report> | grep -e category -e jobname -e "====" -e maxvmem

to check a particular job, do this:

qacct -j <jobid> -u <your-username>

or to get a list of all your jobs ever run:

qacct -j -u <your-username>

The output near the end will tell you the maximum virtual memory used, and how much you requested. For example

maxvmem 9.962GB
arid undefined
ar_sub_time undefined
category -u mattar -l h_stack=128m,h_vmem=16.1G,s_vmem=16G

In this example, 16GB was requested, but only 10GB used, so you can most likely lower the limit to 10.5G or 11G. This allows you to run more jobs at once, and lets other users run more jobs too. If h_vmem and s_vmem are not listed for category, then you requested the default amount of RAM.
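To compare many jobs at once, you can reduce the qacct output to one line per job. The helper below is a sketch with a made-up name, summarize_maxvmem; it assumes each job record lists its jobname line before its maxvmem line, as in the usual qacct -j output.

```shell
#!/bin/bash
# Hypothetical helper: pull jobname and maxvmem out of qacct-style text on
# stdin and print one "jobname maxvmem" line per job. Assumes each job
# record lists jobname before maxvmem.
summarize_maxvmem() {
    awk '$1 == "jobname" { name = $2 }
         $1 == "maxvmem" { print name, $2 }'
}

# On the cluster, for the last 7 days:
#   qacct -j -u <your-username> -d 7 | summarize_maxvmem
```

Keep in mind the caveat above: the reported maxvmem is best treated as a minimum, so leave some headroom when lowering your request.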

NOTE: check if you have a .sge_request file setup in your home dir (~/.sge_request) that you forgot about, and may be unnecessarily requesting more than the default memory for every job.

Per-job memory limit

There is a limit of 30GB per job at this point for jobs running on the default queue, 'all.q'. See notes on the himem.q queue on this page if your job uses more memory.

NOTE that if you request this much memory, you might have to wait for a node to become free since this means using most of a node's memory resources, and your job might be slowed along with other jobs on the node because memory swap space will most likely be used.

Aggregate memory limit

There is a limit on the total memory requested for all your running jobs.

- Batch (qsub) jobs: the number-of-gigs-available-per-core * number-of-slots-in-your-quota. So the default is 192GB total for batch jobs ( 6GB * 32 )

- Interactive (qlogin) jobs: 61GB

This means, for example, that when your slot limit is 32, you can run 16 qsub jobs @ 12GB each at the same time. Any jobs you submit that request additional memory beyond your current usage will be queued and run later.
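The interplay of the two quotas can be worked through with a little arithmetic. The sketch below hard-codes the default batch quotas described on this page (32 slots, and 6GB per slot times 32 slots = 192GB of memory) and handles only single-slot jobs with whole-GB requests; the function name is made up.

```shell
#!/bin/bash
# Worked arithmetic for the default batch quotas: 32 slots and 192 GB of
# aggregate memory (6 GB x 32 slots). Only single-slot jobs with whole-GB
# memory requests are handled, to keep the math in integers.
max_single_slot_jobs() {
    local mem_gb_per_job="$1"
    local slot_quota=32
    local mem_quota_gb=$((6 * 32))
    local by_mem=$((mem_quota_gb / mem_gb_per_job))
    # Whichever quota is exhausted first sets the limit.
    if [ "$by_mem" -lt "$slot_quota" ]; then
        echo "$by_mem"
    else
        echo "$slot_quota"
    fi
}

max_single_slot_jobs 3     # prints 32 (slot quota is the limit)
max_single_slot_jobs 12    # prints 16 (memory quota is the limit)
```

Jobs at or below 6GB each are limited by the slot quota; above that, the memory quota becomes the bottleneck.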

High-Memory Jobs (Give me more RAM!) - the himem.q queue

There is a separate queue for high-memory jobs that need more than the 61GB maximum for qsub jobs run in the default all.q, or the 58GB maximum for qlogin jobs.

Currently there is one high-memory node with 384GB of RAM (otherwise, nodes have 64GB RAM).

To use this node for high-memory qsub jobs, use the himem.q queue, for example like so:

qsub -q himem.q -l h_vmem=120G,s_vmem=120G <my-job-script>

or, for qlogin:

qlogin -q qlogin.himem.q -l h_vmem=120G,s_vmem=120G

Memory and slot limits for high-memory queues

The current limit for each high-memory queue is 192GB, both for an individual job and as an aggregate over multiple jobs with each queue. If you have special need for more, please let the admins know.

The aggregate memory use for qsub jobs is combined with that for other jobs you may have in all.q. This means if you run a single 192GB qsub job, you won't be able to run any other jobs (unless dynamic quotas have kicked in and increased your quotas temporarily). The high-memory qlogin.himem.q, on the other hand, has an aggregate memory limit separate from that for qlogin.q jobs.

Slot limits are aggregated between qsub jobs on both all.q and himem.q, as well as between qlogin sessions on qlogin.q and qlogin.himem.q

NOTE: For more efficient use of our resources, the high-memory nodes are partly used also for regular all.q jobs that use the default amount of memory (~3GB), so slots may not always be available. Please let the admins know if this becomes too much of a problem.

Parallel-processing / Multi-threading

You can request that a job be allowed to run on 2 or more cores (i.e. slots) for parallel-processing. Note of course that your application must be parallel-capable to take advantage of this. If it isn't and you request multiple slots, you'll be wasting your quota and wasting resources for others.

When you request, for example, 3 slots per job, each job will count as 3 slots against your quota, so you'll be able to run fewer jobs at once.

These shell functions are setup to easily start parallel jobs and qlogin sessions:

qsubp2 - 2 cores (think "qsub parallel 2" to get two cores)
qsubp3 - 3 cores
qsubp4 - 4 cores
qsubp5 - 5 cores
qsubp6 - 6 cores

qloginp2 - 2 cores
qloginp3 - 3 cores
qloginp4 - 4 cores
qloginp5 - 5 cores

If you need more cores than that (up to 16, but we don't generally recommend requesting more than 12), look at the shell functions (either run declare -f or look in /etc/profile.d/cfn-common.sh) and copy the code. For example, the command looks like this to run qsub with eight cores (qlogin only allows 5 slots anyway so just use qloginp5):

qsub -binding linear:8 -pe unihost 8 <my_qsub_script_name>

These functions include assignment of the 'unihost' parallel environment. This 'pe' makes sure a parallel job runs with all threads on the same compute node, which is important for the 'shared-memory' parallel applications we typically run.

Parallel Isn't Always Better!

Many jobs/programs/applications don't take full advantage of multiple cores/threads, e.g. a 2-threaded job might only run 1.5x faster than a single-threaded job. This is mainly because only parts of the program may be multi-threaded. Even for a highly-parallel program and data set, you rarely see full utilization of parallel processing, so a 3-threaded job may be at most 2.5x faster than a single-threaded job.

If you have a lot of jobs to run, it's usually better to run them single-threaded. You'll run more of them at once, and in the end all of them will complete sooner. And when the cluster is busy, you'll spend less time waiting for a compute node with the requested number of cores available. So if you've submitted more jobs than you have slots in your quota, you're better off running them single-threaded.

To modify queued jobs, you can run this command:

qalter -binding linear:1 -pe unihost 1 <jobid>

The exception is if you ask for more memory for your jobs. The memory quota is 6GB / slot. So for example, if each of your jobs asks for 12GB, you'll hit your memory quota before (twice as quickly as) your slot quota. So in that case you'd ask for 2 slots per job to speed things up.

And with a single or small number of jobs, you should have a decent idea of whether it will run faster with multiple cores before you ask for them, especially if you run this kind of job periodically. You can run once with 1 core, then once with 4 cores and compare the time it takes (you can add the 'date' command at the beginning and end of your job script). If you ask for a bunch of cores and aren't utilizing them, you're wasting resources for everyone else.
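The timing comparison can be as simple as the sketch below, which records the time before and after the work and prints the difference together with the slot count. The sleep is a stand-in for your real workload.

```shell
#!/bin/bash
# Sketch: record wall-clock time around the work in a job script.
start=$(date +%s)            # seconds since the epoch

sleep 1                      # stand-in for the real workload

end=$(date +%s)
elapsed=$((end - start))

# NSLOTS is set by SGE; default to 1 when run outside the scheduler.
echo "Cores: ${NSLOTS:-1}  Elapsed: ${elapsed}s"
```

Run the same job once with 1 core and once with several, then compare the Elapsed lines in the two .o files.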

The NSLOTS variable

In your qlogin session or qsub job, the NSLOTS environment variable will be set by SGE to the number of slots you've requested (some scripting in your .bash_profile actually handles qlogin instances).

Use this variable in your scripts/commands if you need to know how many slots are available for threading. The Matlab, MRTrix and ITK apps are set up automatically on the cluster by special handling, see below.
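Here is a small sketch of using NSLOTS in a script. It falls back to 1 when NSLOTS is not set (e.g. when testing outside of SGE), and uses the value to cap how many helper processes run at once. The subject names and the 'echo processed' command are placeholders for your own work.

```shell
#!/bin/bash
# Use the number of slots granted by SGE, or 1 if NSLOTS is not set
THREADS=${NSLOTS:-1}
echo "Using $THREADS parallel worker(s)"

# Run one placeholder command per subject, at most $THREADS at a time
RESULT=$(printf '%s\n' subjA subjB subjC subjD |
    xargs -n 1 -P "$THREADS" echo processed)
echo "$RESULT"
```

For a tool that takes a thread-count option, you would pass "$THREADS" to that option instead.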

Memory and Parallel-processing

Requesting more than one core does not automatically request more memory. Generally this is good, since you can more easily stay within your memory needs, keeping resources open for other users. Of course you can request more memory for your parallel job, in the same way as for a single-core job.

'Core Binding' and Multi-threading issues

I've set things up to use the qsub/qlogin -binding option by default to try and force jobs to use only the requested number of CPU cores (as determined by SGE 'slots'). The '-binding' option tells the operating system on the compute node to restrict the job/process to use a particular number of processor cores. Without this option, we run the risk of multi-threaded applications spawning threads that then occupy more cores than those assigned to the job by SGE. This is an experiment, as the PICSL cluster did not use this option (but it did have trouble with multi-threaded apps overspilling their assigned cores, which is what we're trying to avoid here), so we may have to modify this going forward - your feedback will be helpful. The binding option should have an added benefit by avoiding thread migration by the operating system. Thread migration can be costly for compute-intensive processes because of cache-misses that happen after migration.
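If you're curious whether binding is in effect for your session, the following sketch prints the CPUs the current shell is allowed to run on. It assumes a Linux compute node and that binding is implemented as a CPU affinity mask; the Cpus_allowed_list field is Linux-specific, and the function name is hypothetical.

```shell
#!/bin/bash
# Print the list of CPUs this process may run on (Linux only).
# Prints nothing if /proc/self/status is not available.
allowed_cpus() {
    awk '/^Cpus_allowed_list:/ { print $2 }' /proc/self/status 2>/dev/null
}

ALLOWED=$(allowed_cpus)
echo "CPUs usable by this shell: ${ALLOWED:-unknown}"
echo "Slots granted by SGE: ${NSLOTS:-not set}"
```

If binding is doing its job, the first line should list roughly as many CPUs as the slots you requested, rather than every core on the node.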

The qlogin command on the frontend is actually a bash function (try env | grep qlogin) that includes '-binding linear:1' to constrain the job to a single core, and the qloginp2, etc, aliases also include the '-binding linear:N' option.

The qsub command is actually a CfN-specific script that checks for binding and other parameters, and sets appropriate defaults before calling the actual SGE qsub command.

More threads than cores - a bad idea

A multi-threaded app may spawn more threads than there are available cores. In conventional cases this is fine since most threads will be lightly used. But in the computationally intensive applications we use for image processing, many of these threads will be meant for heavy computational loads. The risk is that the application sees, e.g., 16 cores available on the compute nodes, so launches 16 worker threads for processing. If the job however is restricted to a single core or just a small number of cores, this many worker threads will typically degrade performance. Each core's cache will be heavily used by each worker thread, making the memory management very inefficient.

So the idea is to limit the number of threads your application will spawn (see below) to either the number of cores/slots assigned to your job, or perhaps 2x the number. Some experimentation may be in order. For example if each thread is working on the same region of image memory, having 2x the worker threads as cores may prove faster.

This issue will be monitored, and your feedback is important.

Limiting threads in ITK-based apps (e.g. ANTs and ITK-SNAP)

The environment variable ITK_GLOBAL_DEFAULT_NUMBER_OF_THREADS will limit the threads in ITK applications.

By default on the cluster, this is set automatically in your login environment to match the number of slots you've requested. Do not change this unless you know what you're doing.

To manually set this variable to a different number of threads (only if you have a very good reason to), either:

- run export ITK_GLOBAL_DEFAULT_NUMBER_OF_THREADS=2, then pass the -V option to qsub to get the variable into the qsub session environment.

or,

- pass it directly to qsub:

qsubp2 -v ITK_GLOBAL_DEFAULT_NUMBER_OF_THREADS=2 my-parallel-job

NOTE Because the -V option will pass your environment variables to your qsub sessions, be careful what value you set for ITK_GLOBAL_DEFAULT_NUMBER_OF_THREADS. If it does not match the number of slots you're requesting for qsub, threading will not work properly and performance will decrease.
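To guard against the mismatch described in the note, you could add a check like this near the top of a job script. This is only a sketch: the function name is hypothetical, and it treats an unset variable as matching the slot count.

```shell
#!/bin/bash
# Warn if the ITK thread setting doesn't match the slots granted by SGE.
# Returns 0 when they match, 1 when they don't.
check_itk_threads() {
    local slots=${NSLOTS:-1}
    local itk=${ITK_GLOBAL_DEFAULT_NUMBER_OF_THREADS:-$slots}
    if [ "$itk" -ne "$slots" ]; then
        echo "WARNING: ITK threads ($itk) do not match slots ($slots)" >&2
        return 1
    fi
    echo "OK: ITK threads ($itk) match slots ($slots)"
}

# Report only; don't abort the job on a mismatch
check_itk_threads || true
```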

Limiting threads in OMP-based apps like FSL

The default environment is set up to include

export OMP_NUM_THREADS=${NSLOTS}

export OMP_THREAD_LIMIT=${NSLOTS}

which limits OMP-based apps (like FSL) to use only as many threads as you have slots.

Limiting threads in Matlab

By default on the CfN cluster, Matlab is automatically set to use the number of threads matching the number of slots in your job/session. This happens through a script added to your ~/matlab/startup.m file. If you need to replace the settings in your startup.m file, see Matlab Details and Tips.

Do not change this behavior unless you know what you're doing.

To manually change the number of threads used by Matlab, only if you really know what you're doing, there are currently two methods:

1) Use maxNumCompThreads

Run this Matlab command to limit the number of computational threads for the current session only. This command is in your ~/matlab/startup.m file. *HOWEVER* Matlab doesn't always respect what you set using this command. Your own code should be limited by this command, but multi-threaded Matlab internal routines may ignore it and use more threads. This is a known issue with Matlab.

2) -singleCompThread

NOTE This option is passed automatically for you when you start Matlab using the 'matlab' command while on chead or in a single-core qlogin session or qsub job.

Pass this option when you run matlab to limit all of its computation to a single computational thread, e.g.

matlab -singleCompThread

For more details, see Matlab Details and Tips

Matlab Parallel Toolbox

For considerations, see Matlab Details and Tips

Array Jobs - running large data sets

You may have a large data set that consists of many subjects, each of which needs the same analysis. An Array Job makes this process easier.

Simple Bash Loop

But first, here's a simple way to accomplish something like this, using just a bash script. Copy this text into a file called loopjob:

#!/bin/bash
#
LIST="subjA subjB subjC subjD"
for subj in $LIST; do
    echo Submitting job for $subj
    qsub myjob_per_subject 5 $subj
done

and this text into a file called myjob_per_subject:

#!/bin/bash
#
FIRST_PARAMETER=$1
SECOND_PARAMETER=$2
echo I got these two parameters: $FIRST_PARAMETER and $SECOND_PARAMETER
echo Sleeping for $1 seconds
sleep $1
echo Exiting.

Then run chmod u+x loopjob myjob_per_subject to make them executable, then run it via ./loopjob.

You'll see the output in several files like myjob_per_subject.o123.

Running an Array Job

An Array Job is a qsub job that runs 2 or more jobs using the same script. Use the -t option to submit an array job:

qsub -t 1-10 myArrayJobScript

In the above example, qsub will submit 10 jobs, each running myArrayJobScript. The only difference between each run will be the value of the environment variable SGE_TASK_ID in each job's shell environment. You use this variable to differentiate between the different runs of the jobs, as shown in the example script below.

Advantages

Array jobs have some advantages:

- If you pass the -M option to qsub to get an email when your job finishes, you'll only get a single email when all sub-jobs (tasks) finish, instead of an email for each sub-job. This is great when you have dozens or hundreds of sub-jobs.

- You can delete all sub-jobs at once by running qdel on the array job's job-ID.

- You can limit the number of slots used by an array job, thereby leaving some slots free in your quota to do other work while the array job runs. See here for details.

Example Array Job

A simple array job script showing use of SGE_TASK_ID. Create a directory in your home directory called testProject, and inside it create a few directories called patient1, patient2, patient3, etc. Copy this script into a file called arrayJobSimple to try it out:

#!/bin/bash
# A simple demo array job script

# Set the main path to the data
DATA=~/testProject

# Determine the task ID and assign to patient number
#
# Display the array task ID from SGE. For each iteration
# in the array job, this will change.
echo -----
echo SGE_TASK_ID: $SGE_TASK_ID

# Use the task ID to build the patient directory name
SUBJ=patient$SGE_TASK_ID
echo SUBJ: $SUBJ

# Change to the patient directory for this current task ID
cd $DATA/$SUBJ

# Run a script that works on data in the current working directory
$DATA/doAnalysis.sh

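and put this in a file called doAnalysis.sh inside ~/testProject (the array job script runs it as $DATA/doAnalysis.sh), then make it executable with chmod u+x ~/testProject/doAnalysis.sh: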

#!/bin/bash
# demo analysis script
echo Running $0
echo -n "Working Dir: "
pwd
# sleep for 10 seconds:
sleep 10
echo ALL DONE

then run it like this, where N is the number of patient directories you created:

qsub -t 1-N arrayJobSimple

Next, run qstat and you'll see something like this:

job-ID  prior   name       user         state submit/start at     queue                          slots ja-task-ID
-----------------------------------------------------------------------------------------------------------------
137330  0.50500 arrayjobsi mgstauff     r     10/10/2014 17:08:13 all.q@compute-0-14.local       1     1
137330  0.50500 arrayjobsi mgstauff     r     10/10/2014 17:08:13 all.q@compute-0-3.local        1     2
137330  0.50500 arrayjobsi mgstauff     r     10/10/2014 17:08:13 all.q@compute-0-5.local        1     3
137330  0.50500 arrayjobsi mgstauff     r     10/10/2014 17:08:13 all.q@compute-0-14.local       1     4

This looks like qstat output we've seen above, except note that each job has the same job-id. This is the job-id of your array job. Then note the last column under ja-task-ID. This column shows the 'task ID' for each of the sub jobs that the array job created.

When your job is done, the output is available as usual in .e and .o files, but this time with the task-ID appended to the job id, like so:

[mgstauff@chead ~]$ ls arrayjobsimple*
arrayjobsimple
arrayjobsimple.e137330.1  arrayjobsimple.e137330.2  arrayjobsimple.e137330.3  arrayjobsimple.e137330.4
arrayjobsimple.o137330.1  arrayjobsimple.o137330.2  arrayjobsimple.o137330.3  arrayjobsimple.o137330.4

Take a look at these files and see how they differ.
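With many tasks, checking each file by hand gets tedious. Here is a sketch that lists the task IDs whose error (.e) files are not empty, which is often a quick first check for failed tasks. The function name is hypothetical, and the demo files are created in a temporary directory purely for illustration; a non-empty .e file doesn't always mean the task failed, since some programs write routine messages to stderr.

```shell
#!/bin/bash
# List the task IDs of an array job whose .e files are non-empty.
# Usage: tasks_with_errors <directory> <job name> <job id>
tasks_with_errors() {
    local dir=$1 name=$2 jobid=$3 f
    for f in "$dir/$name.e$jobid".*; do
        # -s is true only if the file exists and is not empty;
        # ${f##*.} keeps the text after the last dot, i.e. the task ID
        [ -s "$f" ] && echo "${f##*.}"
    done
    return 0
}

# Demo with made-up files: only task 2 has any error output
DEMO_DIR=$(mktemp -d)
: > "$DEMO_DIR/arrayjobsimple.e137330.1"
echo "some error message" > "$DEMO_DIR/arrayjobsimple.e137330.2"
: > "$DEMO_DIR/arrayjobsimple.e137330.3"
tasks_with_errors "$DEMO_DIR" arrayjobsimple 137330
```

On the cluster you would point it at your own output, e.g. tasks_with_errors ~ arrayjobsimple 137330.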

Example with List of Subject Names

You may need to use a list of subject names, or similar, instead of being able to directly use the numeric value in SGE_TASK_ID. Here's an example of how to do that, showing details of the steps:

#!/bin/bash
#
ARRAY=(subjA subjB subjC subjD subjE subjF)
echo Contents of ARRAY: ${ARRAY[@]}
LENGTH=${#ARRAY[@]}
echo Num of elements in array: $LENGTH
echo SGE_TASK_ID: $SGE_TASK_ID

# array indices start at 0, SGE_TASK_ID starts at 1
INDX=$((SGE_TASK_ID - 1))

if [[ $INDX -ge $LENGTH ]]; then
    echo Array index greater than number of elements
else
    SUBJ=${ARRAY[$INDX]}
    echo Calling command for $SUBJ:
    # This is where you call your own command...
    echo mycommand $SUBJ
    # This is just for demo purposes, makes the script pause/sleep for X seconds
    echo sleep 15
    sleep 15
fi
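A variation on the script above, as a sketch: if your subject names live in a text file with one name per line, you can pick the line matching the task ID instead of keeping the list in the script. The function name and the temporary demo file are hypothetical. In a real array job you would pass $SGE_TASK_ID as the second argument.

```shell
#!/bin/bash
# Print the subject name on line <task id> of a subject list file.
# Usage: subject_for_task <subject file> <task id>
subject_for_task() {
    local file=$1 task_id=$2
    # sed -n "Np" prints only line N; task IDs and line numbers both start at 1
    sed -n "${task_id}p" "$file"
}

# Demo with a made-up subject list
SUBJECT_FILE=$(mktemp)
printf '%s\n' subjA subjB subjC > "$SUBJECT_FILE"

SUBJ=$(subject_for_task "$SUBJECT_FILE" 2)
if [ -z "$SUBJ" ]; then
    echo "No subject for this task ID"
else
    echo "Calling command for $SUBJ"
fi
```

The empty-string check plays the same role as the index-range check in the array version: a task ID past the end of the file yields no subject name.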